Exploring Shannon-Fano Coding

Shannon-Fano coding, developed by Claude Shannon and Robert Fano in the late 1940s, is one of the earliest techniques for lossless data compression. It's an entropy encoding method that assigns variable-length codes to symbols based on their probabilities of occurrence. While often outperformed by Huffman coding (which was developed slightly later and guarantees optimal prefix codes), Shannon-Fano laid important groundwork for statistical compression methods.

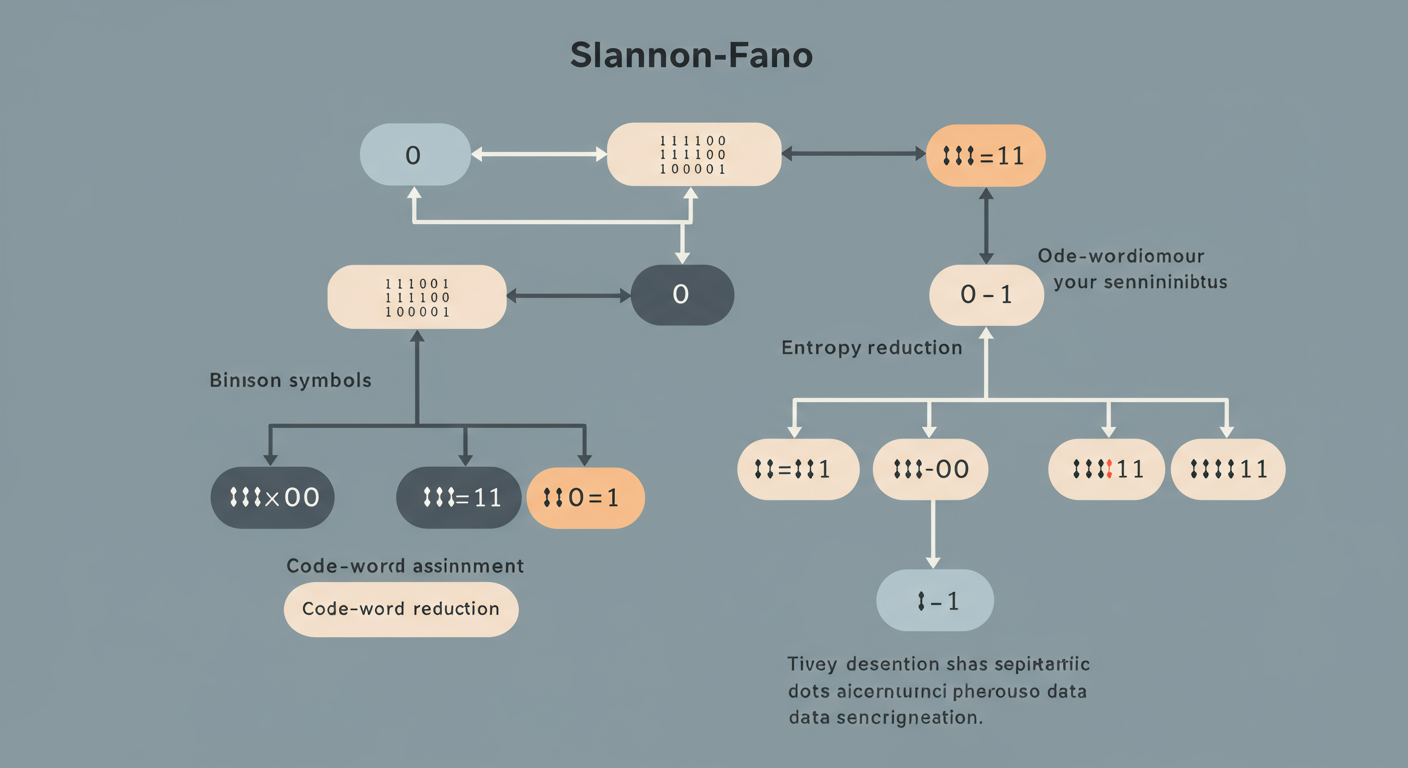

How Shannon-Fano Coding Works

The core idea of Shannon-Fano coding is to build a prefix code tree recursively. The algorithm can be summarized as follows:

- Symbol Probabilities: Start with a list of symbols and their corresponding frequencies or probabilities.

- Sort Symbols: Sort the symbols in descending order of their probabilities.

- Divide and Conquer:

- Divide the sorted list into two sub-lists such that the total probabilities of each sub-list are as close as possible.

- Assign '0' as the prefix for all symbols in the first sub-list and '1' for all symbols in the second sub-list.

- Recursively apply this division process to each sub-list until each sub-list contains only one symbol.

- Code Generation: The code for each symbol is the sequence of '0's and '1's assigned during the recursive divisions.

This process results in shorter codes for more frequent symbols and longer codes for less frequent ones, leading to overall data compression.

Example

Consider a set of symbols {A, B, C, D, E} with frequencies: A=15, B=7, C=6, D=6, E=5.

- Sorted list: A(15), B(7), C(6), D(6), E(5). Total frequency = 39.

- Divide:

- Group 1: {A(15), B(7)} (Total=22) -> Prefix '0'

- Group 2: {C(6), D(6), E(5)} (Total=17) -> Prefix '1'

- Recursive on Group 1 {A(15), B(7)}:

- A(15) -> Prefix '0' (Code for A: 00)

- B(7) -> Prefix '1' (Code for B: 01)

- Recursive on Group 2 {C(6), D(6), E(5)}:

- Group 2.1: {C(6), D(6)} (Total=12) -> Prefix '0'

- C(6) -> Prefix '0' (Code for C: 100)

- D(6) -> Prefix '1' (Code for D: 101)

- Group 2.2: {E(5)} (Total=5) -> Prefix '1' (Code for E: 11)

- Group 2.1: {C(6), D(6)} (Total=12) -> Prefix '0'

Resulting codes: A: 00, B: 01, C: 100, D: 101, E: 11. Note that Shannon-Fano doesn't always produce a unique or optimal set of codes, as the division point can sometimes be ambiguous.

Advantages

- Relatively simple to understand and implement.

- Provides good compression for many types of data, especially when symbol probabilities vary significantly.

- It's a prefix code, meaning no codeword is a prefix of another, allowing for unambiguous decoding.

Disadvantages

- Not always optimal: Huffman coding generally produces more optimal (i.e., shorter average code length) prefix codes. The way Shannon-Fano divides the symbol sets might not always lead to the best possible code lengths.

- Ambiguity in division: The step of dividing the symbols into two groups with nearly equal probabilities can sometimes have multiple solutions, leading to different sets of codes with potentially different efficiencies.

Applications and Relevance

While Huffman coding has largely superseded Shannon-Fano in many practical applications due to its optimality guarantee, Shannon-Fano coding remains historically significant:

- It was a pioneering algorithm in the field of information theory and data compression.

- It's used as an educational tool to explain the concepts of variable-length coding and entropy encoding.

- It was used in the IMPLODE compression method within the .ZIP file format.

Understanding Shannon-Fano helps appreciate the evolution of statistical compression techniques and the fundamental principles that govern how data can be efficiently represented. For further reading, you might explore the GeeksforGeeks article on Shannon-Fano or delve into its Wikipedia page.